skerch: Sketched Linear Operations for PyTorch

| GitHub | PyPI | Docs | CI | Tests |
|---|---|---|---|---|

Fast and scalable numerical linear algebra for everyone

In many computational fields we have to run computations on large or slow linear objects, such as matrices. Very often, however, those objects admit a much smaller representation, e.g. a low-rank one.

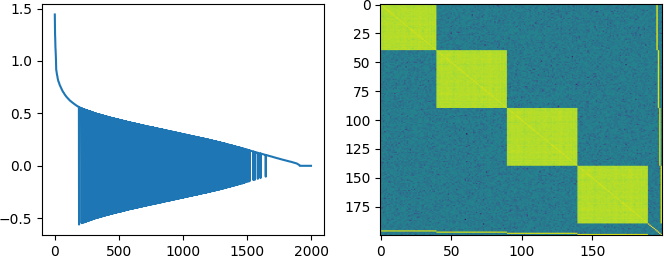

Through the magic of randomized linear algebra, sketched methods allow us to obtain the smaller representation directly, from a few random measurements of the object instead of looking at the whole thing! This can lead to substantial gains in speed and scale at minimal or no cost in accuracy. For example, here is an exact eigendecomposition of a 40000×40000 deep learning Hessian, which runs in ~30 seconds on a CPU:

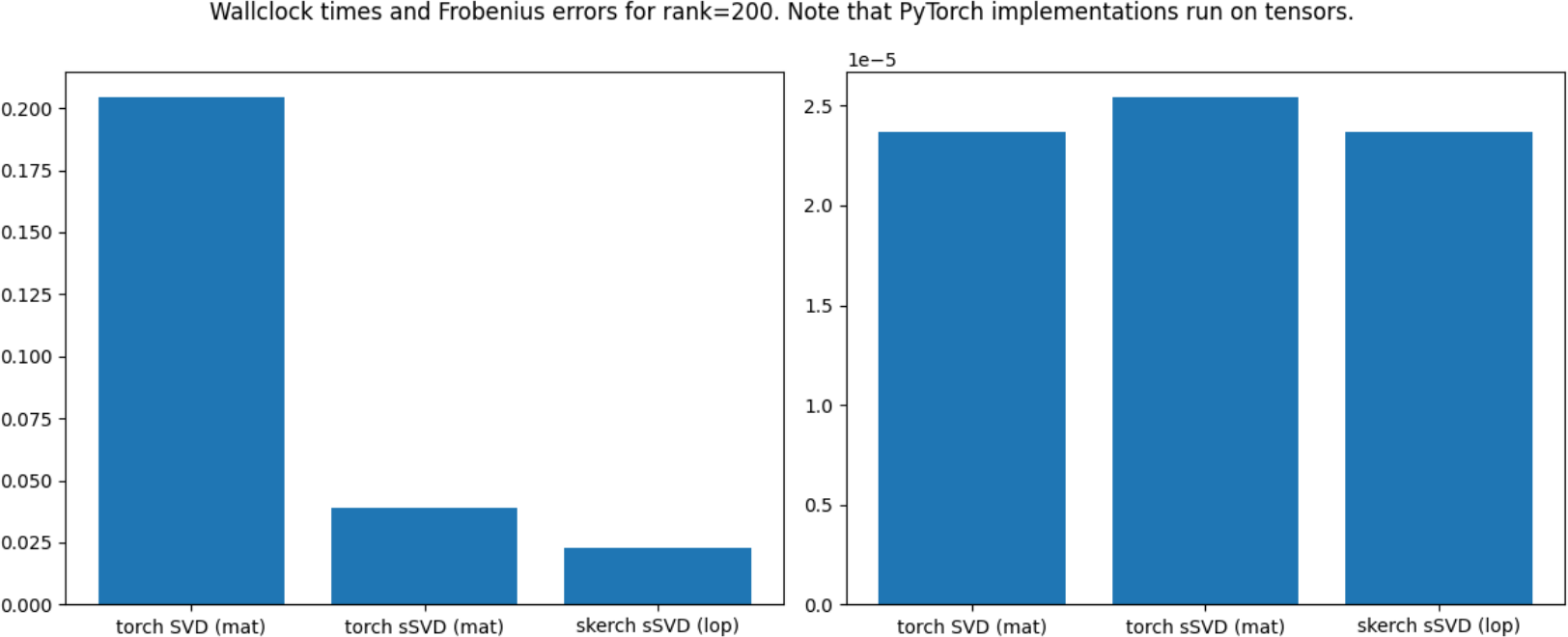
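To make the idea concrete, here is a minimal randomized SVD in plain PyTorch, following the classic range-finder scheme of Halko, Martinsson and Tropp. It is a sketch under stated assumptions, not skerch's implementation: `sketched_svd`, `rank` and `oversample` are hypothetical names. The matrix is only touched through one batch of products `A @ X` with a thin Gaussian test matrix and one batch of products `X @ A`, which is what makes the approach matrix-free.

```python
import torch


def sketched_svd(A, rank, oversample=10, seed=0):
    """Randomized SVD in the style of Halko, Martinsson and Tropp (HMT).

    A is only accessed through A @ X and X @ A. Hypothetical helper for
    illustration, not skerch's API. CPU only, for simplicity.
    """
    h, w = A.shape
    dtype = getattr(A, "dtype", torch.float64)
    gen = torch.Generator().manual_seed(seed)
    k = min(rank + oversample, h, w)
    # Gaussian test matrix: the random "sketch noise"
    omega = torch.randn(w, k, generator=gen, dtype=dtype)
    # Range finder: orthonormal basis Q for the dominant column space of A
    Q, _ = torch.linalg.qr(A @ omega)  # (h, k), one batch of A @ X
    # Project A onto that basis: B = Q^H A, one batch of X @ A
    B = Q.conj().T @ A  # (k, w)
    # Small dense SVD of B, then map the left factor back to full height
    Ub, S, Vh = torch.linalg.svd(B, full_matrices=False)
    return (Q @ Ub)[:, :rank], S[:rank], Vh[:rank]
```

If `A` has rank at most `rank`, the recovered factors match the true SVD up to floating-point error (and signs). PyTorch's own `torch.svd_lowrank` is built on the same randomized scheme.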

And here is a head-to-head comparison between `skerch.algorithms.ssvd` and the PyTorch counterparts, `torch.linalg.svd` and `torch.svd_lowrank`:

skerch delivers sketched methods such as SVD/EIGH, diagonal and triangular approximations, and norm estimation right to your doorstep, with full power and flexibility:

- Built on top of PyTorch: naturally supports CPU and CUDA, as well as complex datatypes, with very few other dependencies

- Efficient parallelized and distributed computations, and support for out-of-core operations via HDF5

- Modular and extensible design: many choices of sketch noise sources and algorithms, and easy adaptation to new settings and operations

- A-posteriori verification tools to test accuracy of sketched approximations
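As an illustration of matrix-free a-posteriori verification, here is a sketch of a standard probe-based error estimate. Whether skerch's verification tools work exactly this way is an assumption, and `frob_error_estimate` is a hypothetical name. It relies on the identity E‖Eω‖² = ‖E‖²_F for a standard Gaussian vector ω, so the error of a factorization can be checked with a few extra products `A @ X`, without ever forming `A`.

```python
import torch


def frob_error_estimate(A, U, S, Vh, num_probes=30, seed=0):
    """Estimate ||A - U diag(S) Vh||_F using only products A @ omega.

    Based on E[||E w||^2] = ||E||_F^2 for a standard Gaussian vector w.
    Hypothetical helper for illustration, not skerch's API.
    """
    gen = torch.Generator().manual_seed(seed)
    omega = torch.randn(A.shape[1], num_probes, generator=gen, dtype=U.dtype)
    # Residual of the approximation, applied to the random probes only
    resid = A @ omega - U @ (S[:, None] * (Vh @ omega))
    # Mean squared probe norm estimates the squared Frobenius error
    return ((resid.abs() ** 2).sum() / num_probes).sqrt()
```

More probes give a tighter estimate; the cost is one extra batch of products with `A` and with the (cheap) low-rank factors.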

All you need to do is to provide an object that satisfies this simple interface, and skerch will do the rest:

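```python
class MyLinOp:
    def __init__(self, shape):
        self.shape = shape  # (height, width)

    def __matmul__(self, x):
        # Replace with your implementation of A @ x, returning the result
        raise NotImplementedError("... implement A @ x ...")

    def __rmatmul__(self, x):
        # Replace with your implementation of x @ A, returning the result
        raise NotImplementedError("... implement x @ A ...")
```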
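For instance, a low-rank plus diagonal operator can satisfy the interface above without ever building the dense matrix. The class name `LowRankPlusDiagLinOp` and its constructor arguments are hypothetical; this is a sketch of the interface, not one of skerch's built-in linops. It assumes `x` is either a single vector or a batch of vectors, stacked as columns for `A @ x` and as rows for `x @ A`.

```python
import torch


class LowRankPlusDiagLinOp:
    """Matrix-free A = U @ diag(s) @ V^H + diag(d), never stored densely.

    Hypothetical example of the interface, not a skerch built-in.
    x may be a vector or a batch: columns for A @ x, rows for x @ A.
    """

    def __init__(self, U, s, V, d):
        n = d.shape[0]
        assert U.shape[0] == V.shape[0] == n, "this sketch assumes square A"
        self.U, self.s, self.V, self.d = U, s, V, d
        self.shape = (n, n)  # (height, width)

    def __matmul__(self, x):
        # A @ x = U (s * (V^H x)) + d * x
        core = self.V.conj().T @ x
        if x.ndim == 1:
            return self.U @ (self.s * core) + self.d * x
        return self.U @ (self.s[:, None] * core) + self.d[:, None] * x

    def __rmatmul__(self, x):
        # x @ A = ((x U) * s) V^H + x * d; broadcasting covers 1D and 2D x
        return ((x @ self.U) * self.s) @ self.V.conj().T + x * self.d
```

Storing `U`, `s`, `V` and `d` takes O(nr + n) memory instead of O(n²), and applying the operator to k vectors costs O(nrk) operations.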

Version 1.0 is the first major release, with lots of good stuff:

- Better test coverage -> fewer bugs

- Clearer and more comprehensive docs

- Support for complex datatypes

- Support for (approximately) low-rank plus diagonal synthetic matrices

- Linop API:
  - New core functionality: Transposed, Signed Sum, Banded, ByBlock
  - Support for parallelization of matrix-matrix products
  - New measurement noise linops: Rademacher, Gaussian, Phase, SSRFT

- Data API:
  - Batched support for arbitrary tensors in distributed HDF5 arrays
  - Modular and extensible HDF5 layouts

- Sketching API:
  - Modular measurement API supporting multiprocessing and HDF5
  - Modular recovery methods (hmt, singlepass, Nystrom, oversampled)

- Algorithm API:
  - Algorithms: Hutch++, XDiag, SSVD, Triangular, SNorm (also Hermitian)
  - Modular and extensible design for noise sources and recovery types
  - Matrix-free a-posteriori error verification and rank estimation
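Diagonal estimators such as Hutch++ and XDiag build on a simple idea: for random sign vectors ω, the entrywise product ω ⊙ (Aω) equals diag(A) in expectation. Below is a minimal sketch of that plain Hutchinson-style diagonal estimator with Rademacher noise, using only `A @ X` products. It is not skerch's Hutch++ or XDiag implementation, and `hutchinson_diag` is a hypothetical name.

```python
import torch


def hutchinson_diag(A, num_probes=100, seed=0, dtype=torch.float64):
    """Estimate diag(A) for a square A using only products A @ X.

    Hutchinson-style estimator with Rademacher probes. Hypothetical helper
    for illustration, not skerch's API; `dtype` must match A's dtype.
    """
    n = A.shape[0]
    assert A.shape[1] == n, "diagonal estimation assumes a square A"
    gen = torch.Generator().manual_seed(seed)
    # Rademacher probes: entries are +1 or -1 with equal probability
    signs = torch.randint(0, 2, (n, num_probes), generator=gen)
    omega = (2 * signs - 1).to(dtype)
    # E[omega * (A @ omega)] = diag(A), because E[omega_i * omega_j] = delta_ij
    return (omega * (A @ omega)).mean(dim=1)
```

The variance of this estimator grows with the off-diagonal mass of `A`; Hutch++- and XDiag-style methods reduce it by additionally exploiting a low-rank sketch of `A`.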

Interested?

Check the example gallery and tutorials for quick and direct ways to get started:

- Installation: `pip install skerch`

- Examples and tutorials here

Here’s to a skerched earth! 🍾 🌍